|

I am a PhD student jointly enrolled at Westlake University and Zhejiang University, in the Inception3D Lab, co-supervised by Anpei Chen and Andreas Geiger. I am fortunate to work with Yuliang Xiu at Endless AI Lab and interned at Tencent AI Lab, collaborating with Xuan Wang and Qi Zhang. I got my M.S. from Xi'an Jiaotong University and my B.S. from Chongqing University. My research topics include computer vision, machine learning, and computer graphics, specifically in spatial intelligence. Email | Google Scholar | GitHub | Twitter | Bluesky |

|

|

The world we see is constantly changing: how do intelligent systems generalize to new observations? This question led me to quest for an understanding of the mechanisms underlying spatial intelligence and to develop methods for enabling artificial intelligence with this remarkable capability. Specifically, I am investigating how generalizability can emerge from reusable 3D & 4D representations, how these representations of the dynamic 3D world could be learned from images & videos, and how inductive biases could serve as expert knowledge to reduce unknown parameters and make learning more efficient. Equal Contribution *, Corresponding Author †, Project Lead ⚑ |

|

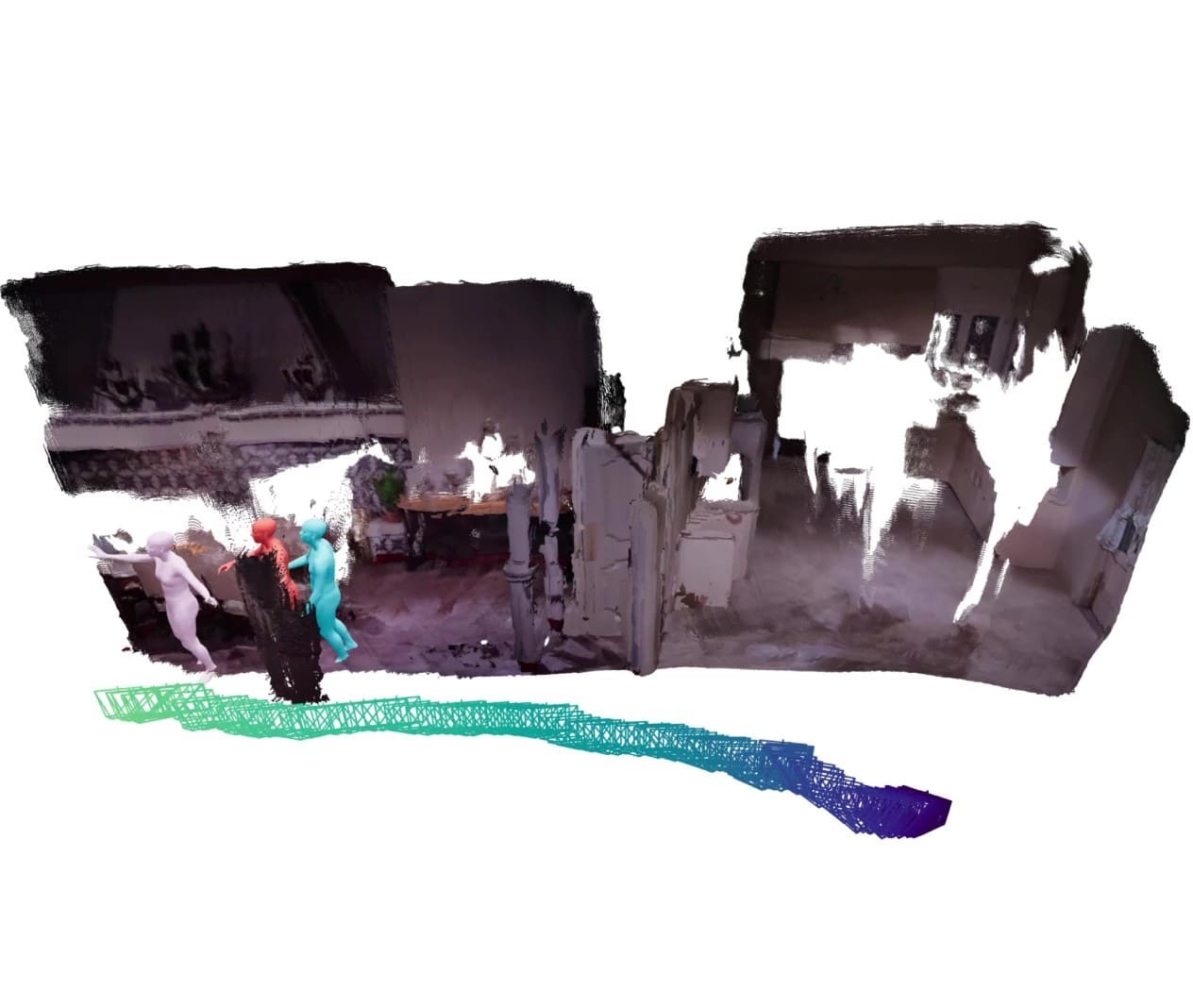

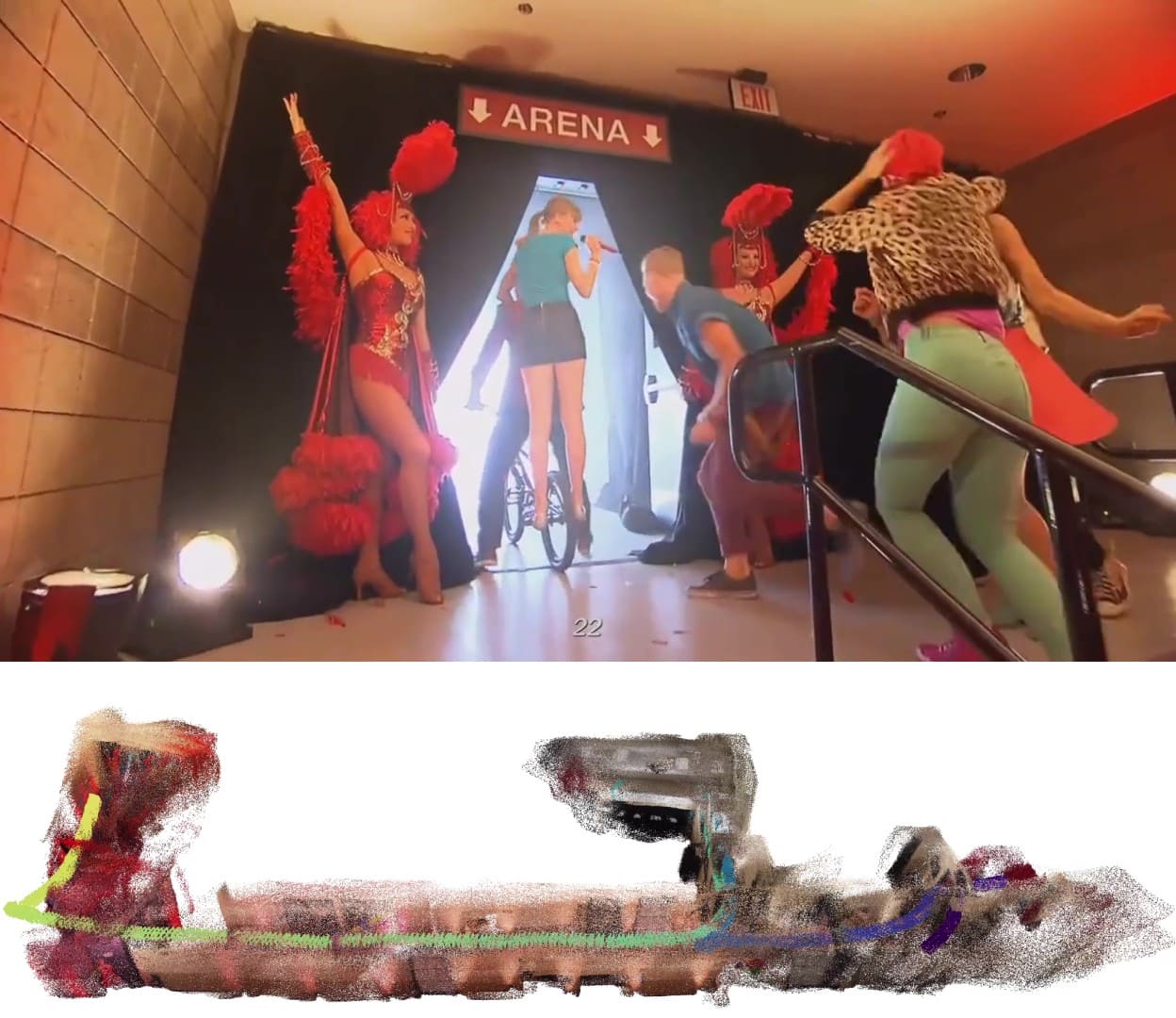

Yue Chen, Xingyu Chen⚑, Yuxuan Xue, Anpei Chen, Yuliang Xiu†, Gerard Pons-Moll arXiv, 2025 project page / arXiv / code / interactive demo Online human-scene reconstruction in One model, One stage. |

|

Xingyu Chen, Yue Chen, Yuliang Xiu, Andreas Geiger, Anpei Chen† arXiv, 2025 project page / arXiv / code A simple state update rule to enhance length generalization for CUT3R. |

|

Xingyu Chen, Yue Chen, Yuliang Xiu, Andreas Geiger, Anpei Chen† IEEE/CVF International Conference on Computer Vision (ICCV), 2025 project page / arXiv / code / interactive demo Easi3R adapts DUSt3R for 4D reconstruction by disentangling and repurposing its attention maps, making 4D reconstruction easier than ever! |

|

Yue Chen, Xingyu Chen, Anpei Chen, Gerard Pons-Moll, Yuliang Xiu† IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025 project page / arXiv / video / code / demo / gallery A unified framework to probe "texture and geometry awareness" of visual foundation models. Novel view synthesis serves as an effective proxy for 3D evaluation. |

|

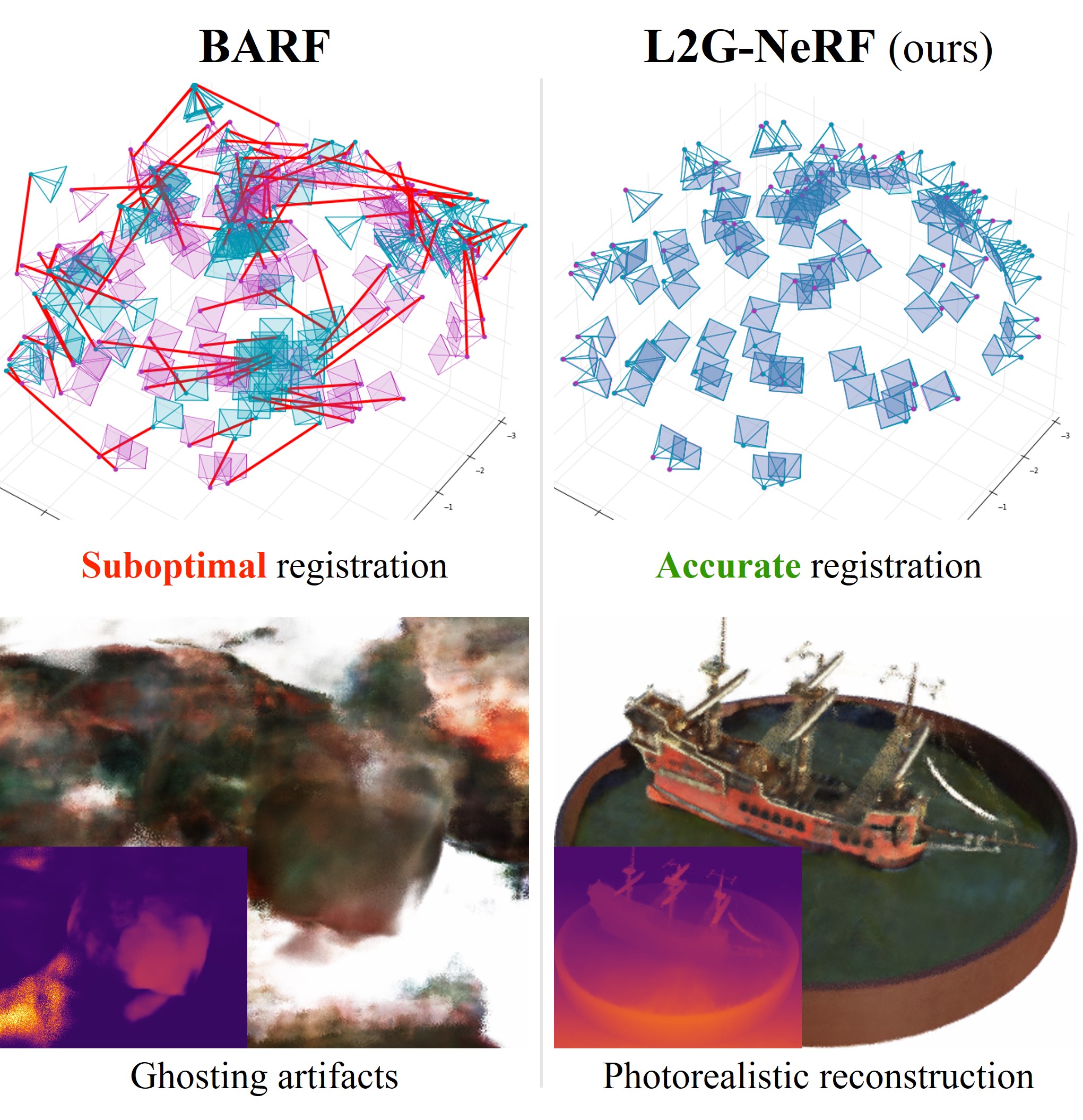

Yue Chen*, Xingyu Chen*⚑, Xuan Wang†, Qi Zhang, Yu Guo†, Ying Shan, Fei Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 project page / arXiv / paper / code / supplementary / video / poster We combine local and global alignment via differentiable parameter estimation solvers to achieve robust bundle-adjusting Neural Radiance Fields. |

|

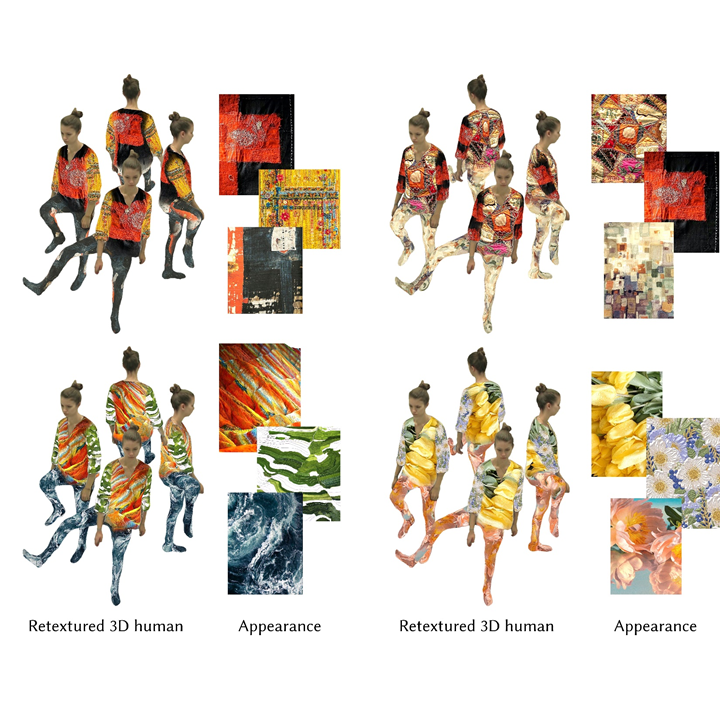

Yue Chen*, Xuan Wang*, Xingyu Chen, Qi Zhang, Xiaoyu Li, Yu Guo†, Jue Wang, Fei Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 project page / arXiv / paper / code / supplementary / video / poster We separate high-frequency human appearance from 3D volume and encode them into a 2D texture, which enables real-time rendering and retexturing. |

|

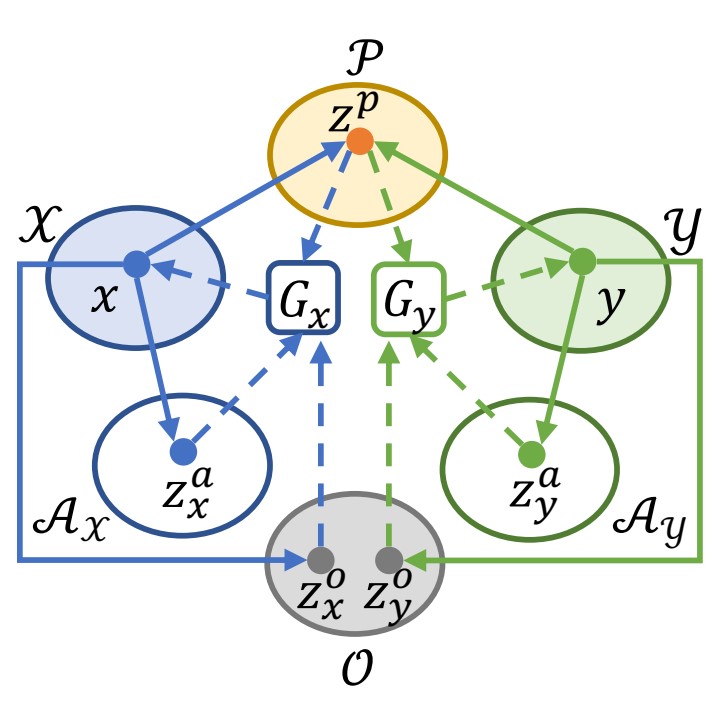

Yue Chen, Xingyu Chen†⚑, Yicen Li IEEE International Conference on Robotics and Automation (ICRA), 2023 arXiv / paper / code / video / poster We decompose the image representation into place, appearance, and occlusion code and use the place code as a descriptor to retrieve images. |

|

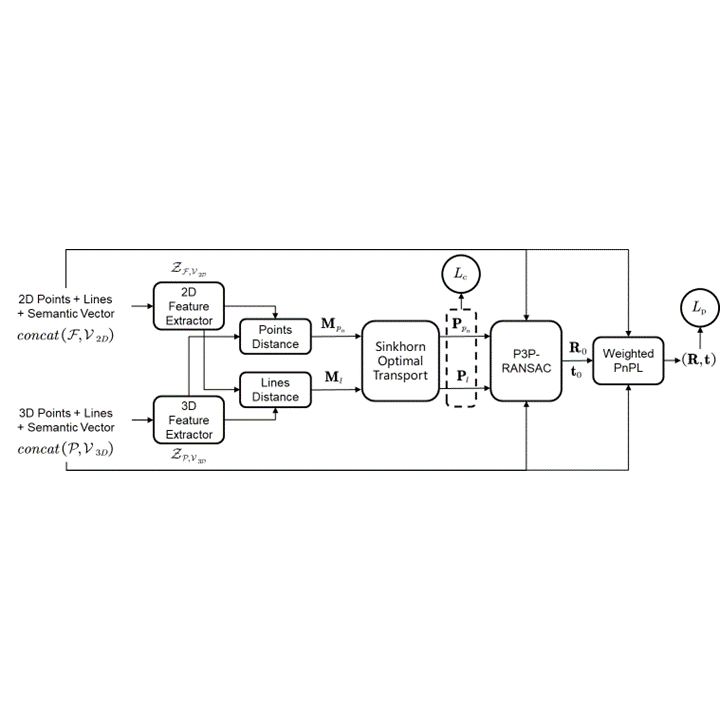

Xingyu Chen, Jianru Xue†, Shanmin Pang IEEE Robotics and Automation Letters (RA-L), 2022 arXiv / paper Given a sparse semantic map (e.g., pole lines, traffic sign midpoints), we estimate camera poses by learning 2D-3D point-line correspondences. |

|

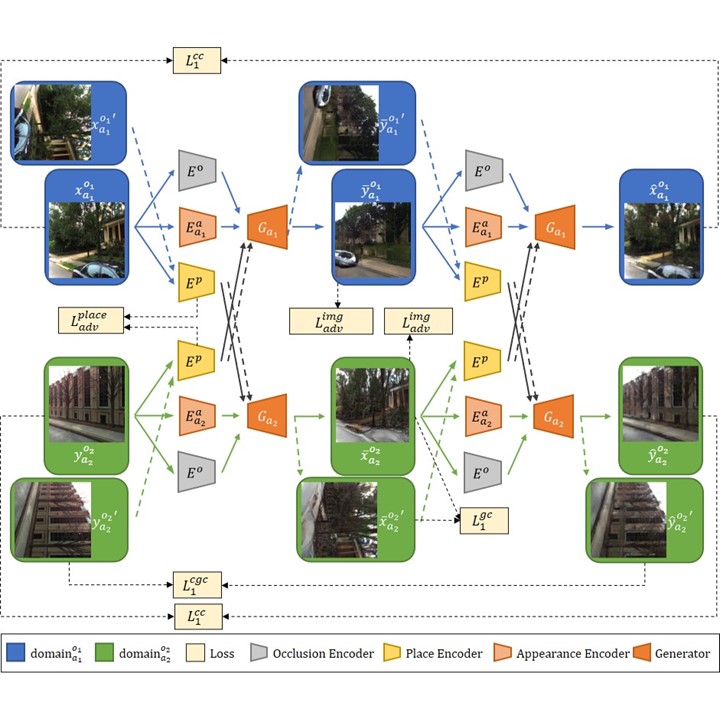

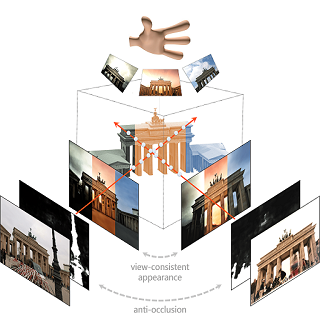

Xingyu Chen, Qi Zhang†, Xiaoyu Li, Yue Chen, Ying Feng, Xuan Wang, Jue Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022 project page / arXiv / paper / supplementary / code / video / poster We recover NeRF from tourism images with variable appearance and occlusions, and consistently render free-occlusion views with hallucinated appearances. |

|

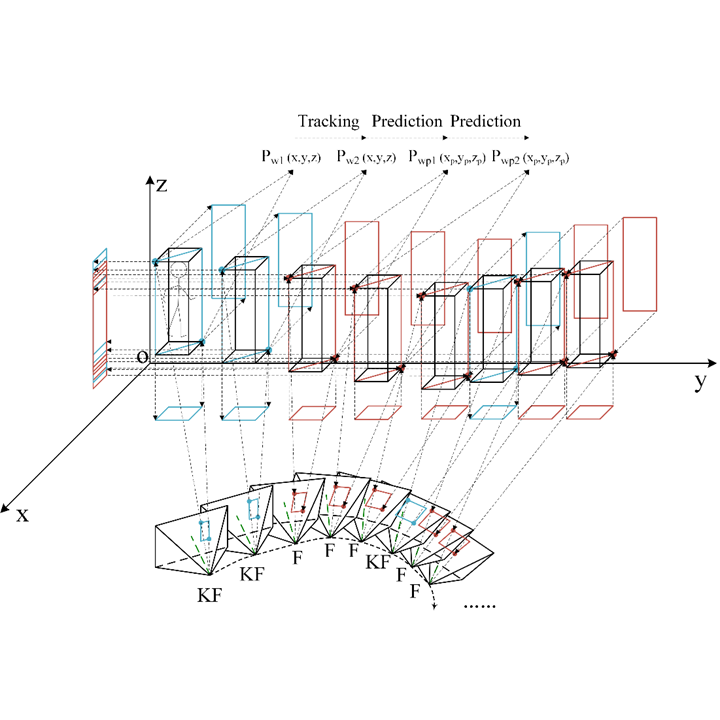

Xingyu Chen, Jianru Xue†, Jianwu Fang, Yuxin Pan, Nanning Zheng IEEE Intelligent Vehicles Symposium (IV), 2020 arXiv / paper Instead of detecting objects in all frames, we detect only keyframes and embed an efficient prediction mechanism in other frames to find dynamic objects. |

|

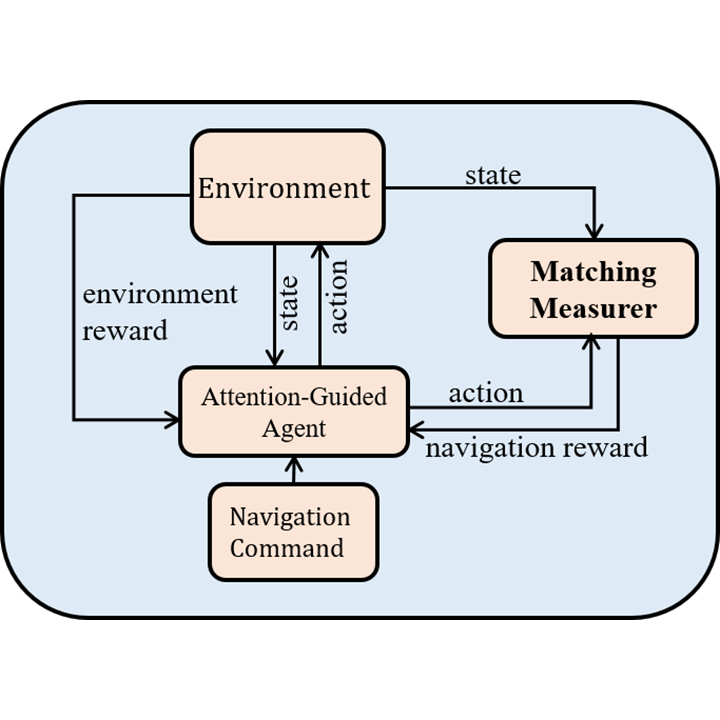

Yuxin Pan, Jianru Xue†, Pengfei Zhang, Wanli Ouyang, Jianwu Fang, Xingyu Chen IEEE International Conference on Robotics and Automation (ICRA), 2020 ResearchGate / paper We propose a navigation command matching model to discriminate actions generated from sub-optimal policies via smooth rewards. |

|

I am passionate about bridging the physical and digital worlds by building next-generation AR and robotics. |

|

|

GPS-Denied Navigation

|

|

|

Hand gesture recognition

|

|

|

Teleoperation

|

|

|

|

ETH Zurich, 2023 Sharing the intuition of dealing with dynamic objects in our previous work and give a prospect of handling the tracking problem via neural fields. |

|

Neural Radiance Fields for Unconstrained Photo Collections 深蓝学院 (Shenlan College online education), 2022 Introduction about Neural Radiance Fields (NeRF) for unconstrained photo collections. Including NeRF, NeRF in the Wild, and Ha-NeRF |

|

|

template adapted from this awesome website

|